Your teams are already building with AI. The question is whether your security program can see it.

AI-powered code is shipping to production across your organization: new LLM integrations, AI provider API keys in config files, vulnerable ML packages in your dependency trees. These are real security risks, and the OWASP Top 10 for LLM Applications (2025) has made that official. But traditional AppSec tools weren’t built to treat them as a single category of risk. A prompt injection is a code issue. A leaked AI provider key is a secret. A vulnerable ML package is a supply chain issue. Today, these findings are scattered across separate modules, with different triage queues, different severity models, and no shared context.

We’re launching AI Security — a new, dedicated violation category in the Cycode platform that brings all of it together.

Why a Dedicated AI Security Category?

We built AI Security as a standalone category for one reason: AI risk is growing faster than any single scanner can cover, and it will keep growing.

Today, AI-related findings are generated by multiple detection engines — SAST catches prompt injection in your code, Secrets detects leaked API keys, SCA flags vulnerable ML packages. But no one is looking at the full picture. Your SAST team sees code issues. Your secrets team sees keys. Nobody sees your organization’s total AI exposure.

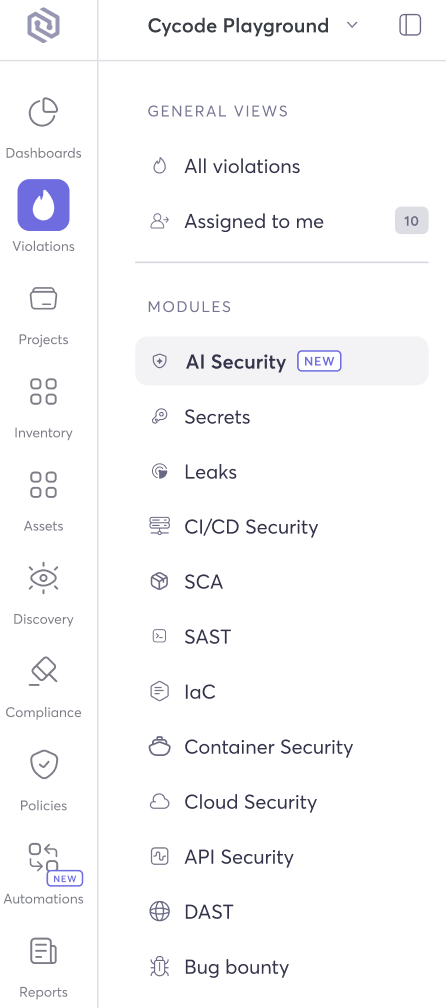

AI Security changes that. It sits alongside Secrets, SAST, SCA, Leaks, IaC, and the rest of your security modules as a first-class category — with its own detection policies, risk scoring, and triage workflows. Every AI-related finding, regardless of which engine found it, appears in one prioritized view. You can immediately answer the question that matters: how exposed are we to AI risk, and what do we fix first?
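As a rough mental model of what “one prioritized view” means, the sketch below normalizes findings from several engines into a single record type and sorts them by severity, then by risk score. The `AIFinding` record, its fields, and the 0 to 100 score are hypothetical illustrations, not Cycode’s data model.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Illustrative severity scale; a higher value is more urgent."""

    INFO = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class AIFinding:
    """Hypothetical normalized record for a finding from any engine."""

    engine: str  # e.g. "SAST", "Secrets", "SCA"
    title: str
    repository: str
    severity: Severity
    risk_score: float  # hypothetical 0-100 score


def unified_view(*engine_results: list[AIFinding]) -> list[AIFinding]:
    """Merge per-engine result lists into one list, most urgent first."""
    merged = [finding for results in engine_results for finding in results]
    return sorted(merged, key=lambda f: (f.severity, f.risk_score), reverse=True)


# Usage: three engines feed one queue.
sast = [AIFinding("SAST", "User input in developer prompt", "shop-api", Severity.HIGH, 78.0)]
secrets = [AIFinding("Secrets", "Exposed AI provider key", "ml-pipeline", Severity.CRITICAL, 92.0)]
sca = [AIFinding("SCA", "Vulnerable ML package", "shop-api", Severity.HIGH, 81.0)]

for finding in unified_view(sast, secrets, sca):
    print(finding.severity.name, finding.engine, finding.repository)
```

The engine name is kept on each record, so the merged view still tells a triager which scanner produced the finding.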

And this is just the starting point. As AI adoption evolves — new providers, new frameworks, new attack patterns — the AI Security category will grow with it. New policies and detection capabilities will automatically surface here, giving you a single place that always reflects your current AI risk posture, no matter how quickly the landscape changes.

Understanding Your AI Security Posture

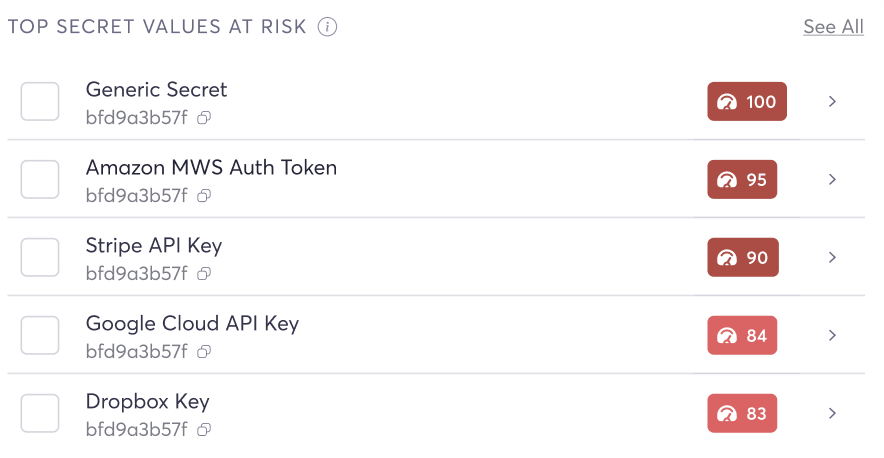

Beyond the violations list, the AI Security dashboard gives you an at-a-glance view of your entire AI risk surface — violations by risk and age, highest-risk AI packages and their vulnerabilities, top exposed AI secrets, and the projects and repositories with the greatest AI security exposure.

The dashboard enables security leaders and AppSec teams to quickly assess overall AI risk severity, identify where exposure is concentrated, track how long findings have remained open, and map risk to specific projects, repositories, and owners for remediation.
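Tracking how long findings have stayed open comes down to bucketing each finding by age and counting per severity. This is a minimal sketch that assumes each finding carries a severity label and an opened date. The bucket boundaries (7, 30, and 90 days) are arbitrary choices for illustration, not the dashboard’s actual thresholds.

```python
from collections import Counter
from datetime import date


def age_bucket(opened: date, today: date) -> str:
    """Assign an open finding to an illustrative age bucket."""
    days_open = (today - opened).days
    if days_open <= 7:
        return "0-7 days"
    if days_open <= 30:
        return "8-30 days"
    if days_open <= 90:
        return "31-90 days"
    return "90+ days"


def risk_by_age(findings: list[tuple[str, date]], today: date) -> Counter:
    """Count findings per (severity, age bucket) pair, as an aging widget might."""
    return Counter((severity, age_bucket(opened, today)) for severity, opened in findings)


counts = risk_by_age(
    [("CRITICAL", date(2025, 6, 28)), ("CRITICAL", date(2025, 6, 25)), ("HIGH", date(2025, 3, 1))],
    today=date(2025, 6, 30),
)
print(counts)
```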

This level of visibility is only possible when AI-related findings are unified in a single view.

What’s Covered: Different Layers of AI Attack Surface Detection

The AI Security module aggregates violations from multiple policy types and detection engines — each targeting a different layer of the AI attack surface. Here are some of the key areas covered today:

-

SAST — LLM-specific code vulnerabilities. Deep semantic analysis of how your code interacts with LLM APIs.

-

Secrets — AI provider API keys. Dedicated detection for AI provider credentials across your codebase, config files, and pipelines.

-

SCA — Vulnerabilities in AI/ML dependencies. Identifies known CVEs in AI/ML dependencies.

-

Custom Policies — Organization-specific AI security rules. Build your own detection rules using Cycode’s Knowledge Graph to match your specific AI governance requirements, from shadow AI inventory to compliance gates.

-

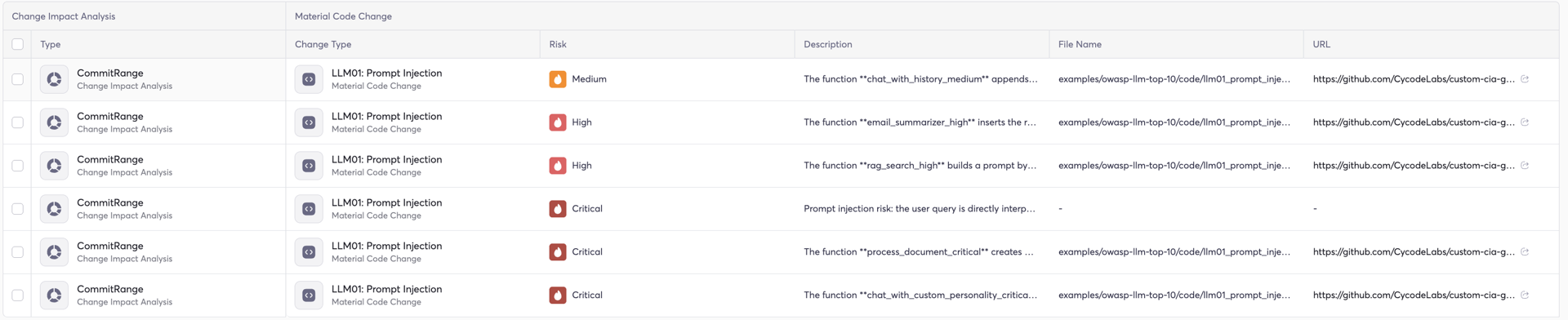

Change Impact Analysis — AI-powered classification of code changes. A fundamentally different engine that semantically understands every pull request and commit.

Let’s walk through the SAST, Secrets, SCA, and Change Impact Analysis engines in turn.

SAST Policies: Catching LLM Vulnerabilities in Your Code

Cycode’s AI Security module includes SAST policies built on the Bearer engine that perform deep semantic analysis of LLM API calls. These aren’t generic code quality checks — they’re designed to catch the vulnerability patterns that the OWASP Top 10 for LLM Applications specifically calls out.

Here are three examples of what these policies detect:

Example 1: User Input in Privileged LLM Instructions

What it detects: Untrusted user input being placed into high-authority LLM instruction channels — such as the developer role, instructions, or additional_instructions fields in OpenAI and other LLM API calls. This is how prompt injection vulnerabilities are introduced at the code level.

Why it matters: In OpenAI-style conversation hierarchies, the developer and system roles carry higher authority than regular user messages. When attacker-controlled text is inserted into these privileged channels, it can override intended behavior, bypass guardrails, and steer the model toward unsafe actions — including invoking tools it shouldn’t have access to. This maps directly to LLM01:2025 — Prompt Injection and CWE-1427: Improper Neutralization of Input Used for LLM Prompting.

What this looks like in code:

```python
# ❌ VULNERABLE: User input flows directly into the system prompt
from flask import request  # assumes a Flask request context
from openai import OpenAI

client = OpenAI()

user_preference = request.args.get("preference")

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "developer", "content": f"You are a helpful assistant. User preference: {user_preference}"},
        {"role": "user", "content": "What should I buy?"},
    ],
)
```

```python
# ✅ SAFE: User input stays in the user message; system prompt is static
from flask import request  # assumes a Flask request context
from openai import OpenAI

client = OpenAI()

SYSTEM_PROMPT = "You are a helpful shopping assistant."

user_preference = request.args.get("preference")

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "developer", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"My preference is: {user_preference}. What should I buy?"},
    ],
)
```

The key distinction: user-supplied data should never enter developer, system, or instructions fields. Keep those channels static and treat all user input as untrusted.
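To make the pattern concrete, here is a deliberately naive, syntax-only sketch of how a static check could flag it. The sketch parses Python source with the standard `ast` module. It reports message dicts whose role is `developer` or `system` and whose content is built dynamically, meaning an f-string, a concatenation, or a call. The function name and heuristics are illustrative assumptions, not how the Bearer-based policies work. Real detection follows data flow from `request` into the prompt; this sketch does not, so it misses tainted variables and flags harmless f-strings.

```python
import ast

PRIVILEGED_ROLES = {"developer", "system"}
# Expression types that usually mean the prompt text is assembled at runtime.
DYNAMIC_NODES = (ast.JoinedStr, ast.BinOp, ast.Call)


def find_dynamic_privileged_prompts(source: str) -> list[int]:
    """Return line numbers of message dicts whose privileged role has dynamic content."""
    flagged = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Dict):
            continue
        # Map string keys to their value nodes; `**spread` entries have a None key.
        fields = {
            key.value: value
            for key, value in zip(node.keys, node.values)
            if isinstance(key, ast.Constant) and isinstance(key.value, str)
        }
        role, content = fields.get("role"), fields.get("content")
        if (
            isinstance(role, ast.Constant)
            and role.value in PRIVILEGED_ROLES
            and isinstance(content, DYNAMIC_NODES)
        ):
            flagged.append(node.lineno)
    return sorted(flagged)


snippet = 'messages = [{"role": "developer", "content": f"Pref: {pref}"}]'
print(find_dynamic_privileged_prompts(snippet))  # [1]
```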

Example 2: User-Controlled AI API Consumption Parameters

What it detects: Application code that allows user input to directly control OpenAI API consumption parameters such as max_output_tokens, max_tokens, or logit_bias. This creates an unbounded consumption vulnerability where attackers can manipulate resource usage.

Why it matters: When users can set their own token limits or manipulate logit bias values, they can force excessively large API responses (driving up costs), slow down response times for other users, or exhaust rate limits — effectively creating a denial-of-service condition through your own API budget. This maps to LLM10:2025 — Unbounded Consumption and CWE-770: Allocation of Resources Without Limits or Throttling.

What this looks like in code:

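```python
# ❌ VULNERABLE: User controls token limits directly
from flask import request  # assumes a Flask request context
from openai import OpenAI

client = OpenAI()

user_query = request.args.get("q", "")  # hypothetical query parameter
max_tokens = int(request.args.get("max_tokens", 1000))

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": user_query}],
    max_tokens=max_tokens,  # Attacker can raise this to the model's maximum output length
)
```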

```python
# ✅ SAFE: Server enforces a maximum, capping user-supplied values
from flask import request  # assumes a Flask request context
from openai import OpenAI

client = OpenAI()

MAX_ALLOWED_TOKENS = 2000

user_query = request.args.get("q", "")  # hypothetical query parameter
user_requested = int(request.args.get("max_tokens", 1000))

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": user_query}],
    max_tokens=min(user_requested, MAX_ALLOWED_TOKENS),  # Hard server-side cap
)
```

Always enforce server-side limits on consumption parameters. Never pass user-controlled values directly to API configuration fields.
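The `min()` cap above still lets negative values through, and it raises an exception on non-numeric input. A slightly more defensive helper clamps the value into a fixed range and falls back to a default. It is sketched here on the assumption that the value arrives as an optional query-string string. `resolve_max_tokens` and `DEFAULT_TOKENS` are hypothetical names.

```python
from typing import Optional

MAX_ALLOWED_TOKENS = 2000  # server-side ceiling, same value as the example above
DEFAULT_TOKENS = 1000


def resolve_max_tokens(raw_value: Optional[str]) -> int:
    """Turn an untrusted query-string value into a safe max_tokens setting."""
    if raw_value is None:
        return DEFAULT_TOKENS
    try:
        requested = int(raw_value)
    except ValueError:
        return DEFAULT_TOKENS  # non-numeric input falls back to the default
    # Clamp into [1, MAX_ALLOWED_TOKENS] so negative or oversized values are neutralized.
    return max(1, min(requested, MAX_ALLOWED_TOKENS))


for raw in [None, "500", "100000", "-5", "lots"]:
    print(raw, "->", resolve_max_tokens(raw))
```

The fallback behavior is a design choice. Rejecting the request with a 400 error is an equally valid option if silent correction would confuse clients.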

Example 3: Missing OpenAI safety_identifier on LLM Requests

What it detects: OpenAI API calls that are missing a safety_identifier — a stable per-user identifier (such as a hashed username or email) that OpenAI uses to attribute activity to individual end-users and detect abuse.

Why it matters: Without a safety_identifier, you lose the ability to trace abuse back to specific users. If someone uses your application to generate harmful content or trigger policy violations, OpenAI can’t provide actionable feedback — or take targeted enforcement — tied to specific accounts. OpenAI may even block the associated safety_identifier entirely from API access in high-confidence abuse cases, which only works if you’re sending one. This is a monitoring and auditability gap that makes incident response significantly harder. Maps to A09:2021 — Security Logging and Monitoring Failures and CWE-778: Insufficient Logging.

What this looks like in code:

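```python
# ❌ VULNERABLE: No user attribution on the API call
from flask import request  # assumes a Flask request context
from openai import OpenAI

client = OpenAI()

user_query = request.args.get("q", "")  # hypothetical query parameter

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": user_query}],
)
```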

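```python
# ✅ SAFE: Includes a hashed user identifier for traceability
import hashlib

from flask import request  # assumes a Flask request context
from flask_login import current_user  # assumes Flask-Login tracks the signed-in user
from openai import OpenAI

client = OpenAI()

user_query = request.args.get("q", "")  # hypothetical query parameter
user_hash = hashlib.sha256(current_user.email.encode()).hexdigest()

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": user_query}],
    safety_identifier=user_hash,  # Enables abuse detection and per-user monitoring
)
```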

This is a low-effort, high-value fix. Adding a hashed identifier takes one line and dramatically improves your ability to detect and respond to abuse.
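One refinement worth considering: a plain SHA-256 of an email address can be reversed by hashing a list of candidate addresses and comparing the results. Keying the hash with HMAC and a server-side secret keeps the identifier stable per user. It also makes that lookup impractical for anyone without the key. This is a sketch. `SAFETY_ID_KEY` and `safety_identifier_for` are hypothetical names, and the key belongs in a secret store rather than in source code.

```python
import hashlib
import hmac

# Hypothetical server-side secret; load it from your secret store in practice.
SAFETY_ID_KEY = b"replace-with-a-secret-from-your-vault"


def safety_identifier_for(email: str) -> str:
    """Derive a stable, non-reversible per-user value for safety_identifier."""
    normalized = email.strip().lower()  # the same user maps to the same identifier
    return hmac.new(SAFETY_ID_KEY, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


print(safety_identifier_for("Alice@Example.com"))
```

Rotating the key changes every identifier, so treat it as long-lived. Otherwise per-user abuse history is lost.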

These are just a few examples — our SAST detection for LLM-specific vulnerabilities continues to expand as new attack patterns emerge and the OWASP LLM Top 10 evolves.

Secret Policies: Do You Know Where All Your AI API Keys Are?

Every AI integration starts with an API key. With the explosion of AI adoption — both sanctioned and shadow — most organizations now have keys for multiple providers scattered across repos, config files, and CI/CD pipelines. The question isn’t whether your teams have AI API keys. It’s whether you know where they all are.

Cycode’s AI Security module includes dedicated secret detection policies for AI provider API keys across the major providers and platforms your teams are actually using — including OpenAI, Anthropic, Google Gemini, Azure OpenAI, Hugging Face, Cohere, and more.

These aren’t generic regex patterns. Each policy is tuned to the specific key format and entropy profile of each AI provider, reducing false positives while ensuring comprehensive coverage across your codebase, config files, and pipeline definitions.
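Pairing a provider-specific format with an entropy check can be sketched in a few lines. A prefix pattern finds candidates, and Shannon entropy filters out low-randomness placeholders such as `sk-xxxxxxxx...`. The following are simplified assumptions for illustration, not Cycode’s detection rules: the patterns (`sk-ant-` for Anthropic, other `sk-` keys for OpenAI, `hf_` for Hugging Face), the minimum lengths, and the 3.5 bits-per-character threshold.

```python
import math
import re
from collections import Counter

# Simplified, illustrative patterns; real key formats vary and change over time.
PROVIDER_PATTERNS = [
    ("Anthropic", re.compile(r"\bsk-ant-[A-Za-z0-9_-]{20,}")),
    ("OpenAI", re.compile(r"\bsk-(?!ant-)[A-Za-z0-9_-]{20,}")),
    ("Hugging Face", re.compile(r"\bhf_[A-Za-z0-9]{30,}")),
]
MIN_ENTROPY_BITS_PER_CHAR = 3.5  # assumption: would be tuned per provider


def shannon_entropy(text: str) -> float:
    """Average bits of information per character."""
    if not text:
        return 0.0
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in Counter(text).values())


def find_ai_keys(text: str) -> list[tuple[str, str]]:
    """Return (provider, match) pairs that fit a key pattern AND look random enough."""
    hits = []
    for provider, pattern in PROVIDER_PATTERNS:
        for match in pattern.finditer(text):
            candidate = match.group(0)
            if shannon_entropy(candidate) >= MIN_ENTROPY_BITS_PER_CHAR:
                hits.append((provider, candidate))
    return hits


config = "ANTHROPIC_API_KEY=sk-ant-Q7vX2mLp9RtB4nZc8WkY3hJd6FsA1gEu\nOPENAI_API_KEY=sk-" + "x" * 30
print(find_ai_keys(config))
```

The negative lookahead in the OpenAI pattern keeps an Anthropic key from being reported twice. The entropy filter is what drops the repeated-`x` placeholder.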

SCA Policy: The AI Dependencies Nobody’s Watching

AI/ML applications rely on a deep stack of specialized packages — model frameworks like transformers and torch, embedding libraries, vector databases like chromadb, orchestration tools like langchain, and inference runtimes. These packages are just as susceptible to CVEs as any other dependency, but they’re often overlooked in traditional SCA results because security teams haven’t been trained to look for them.

The SCA policy identifies known vulnerabilities (CVEs) in AI and ML packages across your repositories. Each SCA violation includes:

- The CVE identifier and severity (CVSS score)
- The affected package and version
- The manifest file where the dependency is declared (requirements.txt, package.json, pom.xml, etc.)
- A recommended fix version when available

Risk scores reflect both the CVE severity and the AI-specific deployment context — because a remote code execution vulnerability in a model-serving library carries different real-world risk than the same CVE score in a logging utility.
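The violation fields listed above map naturally onto a small record. The sketch below checks exact pins in a `requirements.txt` against an advisory table. The package `example-ml-lib`, the advisory ID, the CVSS value, and the fix version are placeholders, not real vulnerability data. A production SCA engine also resolves transitive dependencies, version ranges, and full PEP 440 version semantics, none of which this naive parser handles.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Placeholder advisory feed: package -> (advisory ID, first fixed version, CVSS score).
# These values are invented for illustration and are NOT real vulnerability data.
ADVISORIES = {
    "example-ml-lib": ("EXAMPLE-ADVISORY-0001", "2.4.0", 9.8),
}

PIN = re.compile(r"([A-Za-z0-9_.-]+)==([0-9]+(?:\.[0-9]+)*)")


@dataclass
class ScaViolation:
    advisory_id: str
    cvss: float
    package: str
    version: str
    manifest: str
    fix_version: Optional[str]


def parse_version(version: str) -> tuple[int, ...]:
    """Naive numeric parse; trailing zeros are dropped so "2.4" == "2.4.0"."""
    parts = [int(part) for part in version.split(".")]
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def scan_requirements(manifest: str, contents: str) -> list[ScaViolation]:
    """Flag exact pins (pkg==version) that fall below an advisory's fix version."""
    violations = []
    for raw_line in contents.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        match = PIN.fullmatch(line)
        if not match:
            continue
        name, version = match.group(1).lower(), match.group(2)
        advisory = ADVISORIES.get(name)
        if advisory and parse_version(version) < parse_version(advisory[1]):
            advisory_id, fixed_in, cvss = advisory
            violations.append(ScaViolation(advisory_id, cvss, name, version, manifest, fixed_in))
    return violations


reqs = "example-ml-lib==2.3.1  # pinned by the ML team\nnumpy==1.26.4\n"
for v in scan_requirements("requirements.txt", reqs):
    print(v.advisory_id, v.package, v.version, "->", v.fix_version)
```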

Change Impact Analysis: Detecting What Static Rules Can’t

The SAST, Secrets, and SCA policies described above are all rule-based detection — they scan your codebase for known patterns, key formats, and vulnerable package versions. They’re fast, precise, and effective for well-defined issues.

But some of the most dangerous AI security risks can’t be captured by static rules. They’re semantic — they depend on what the code does in context, not what it looks like syntactically.

Can a regex tell you that a pull request just introduced a path where LLM output flows into a shell command? Can a SAST rule catch that someone removed a rate limit on an AI API endpoint? These are the risks the OWASP Top 10 for LLM Applications describes — and they’re precisely the ones that slip through traditional scanners.
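For reference, here is the shape of the first risk in that question: model output reaching a shell. The sketch contrasts passing the text to `subprocess.run(..., shell=True)` with rejecting shell syntax, parsing the text with `shlex`, and checking an allowlist. `ALLOWED_COMMANDS` and the function names are hypothetical. Allowlisting the executable alone is not sufficient for tools that accept dangerous arguments.

```python
import shlex
import subprocess

# Hypothetical allowlist of commands the app will run on the model's behalf.
ALLOWED_COMMANDS = {"ls", "date", "uptime"}
SHELL_METACHARACTERS = set(";|&$`<>\n")


def run_llm_command_unsafely(llm_output: str) -> None:
    # ❌ Model output goes straight to a shell: "ls; rm -rf ~" would run both commands.
    subprocess.run(llm_output, shell=True, check=False)


def validate_llm_command(llm_output: str) -> list[str]:
    """Treat model output as untrusted input: reject shell syntax, then allowlist."""
    if any(ch in SHELL_METACHARACTERS for ch in llm_output):
        raise ValueError("shell metacharacters are not allowed")
    argv = shlex.split(llm_output)  # also raises ValueError on unbalanced quotes
    if not argv or argv[0] not in ALLOWED_COMMANDS:
        raise ValueError(f"command not allowed: {argv[:1]}")
    return argv


def run_llm_command_safely(llm_output: str) -> subprocess.CompletedProcess:
    # ✅ A validated argv list with no shell means the model's text is never shell-parsed.
    return subprocess.run(validate_llm_command(llm_output), capture_output=True, check=False)
```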

This is where Change Impact Analysis (CIA) comes in.

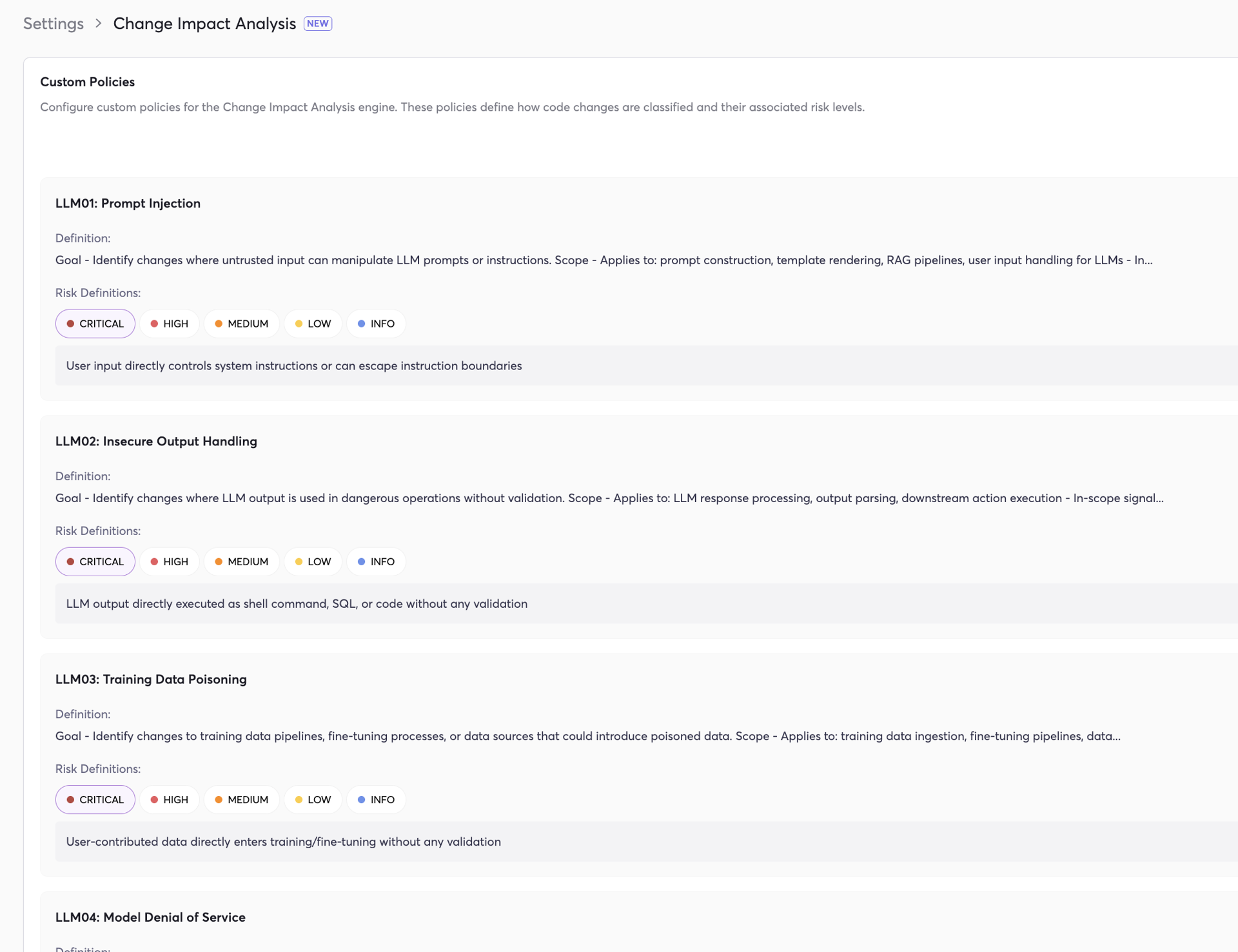

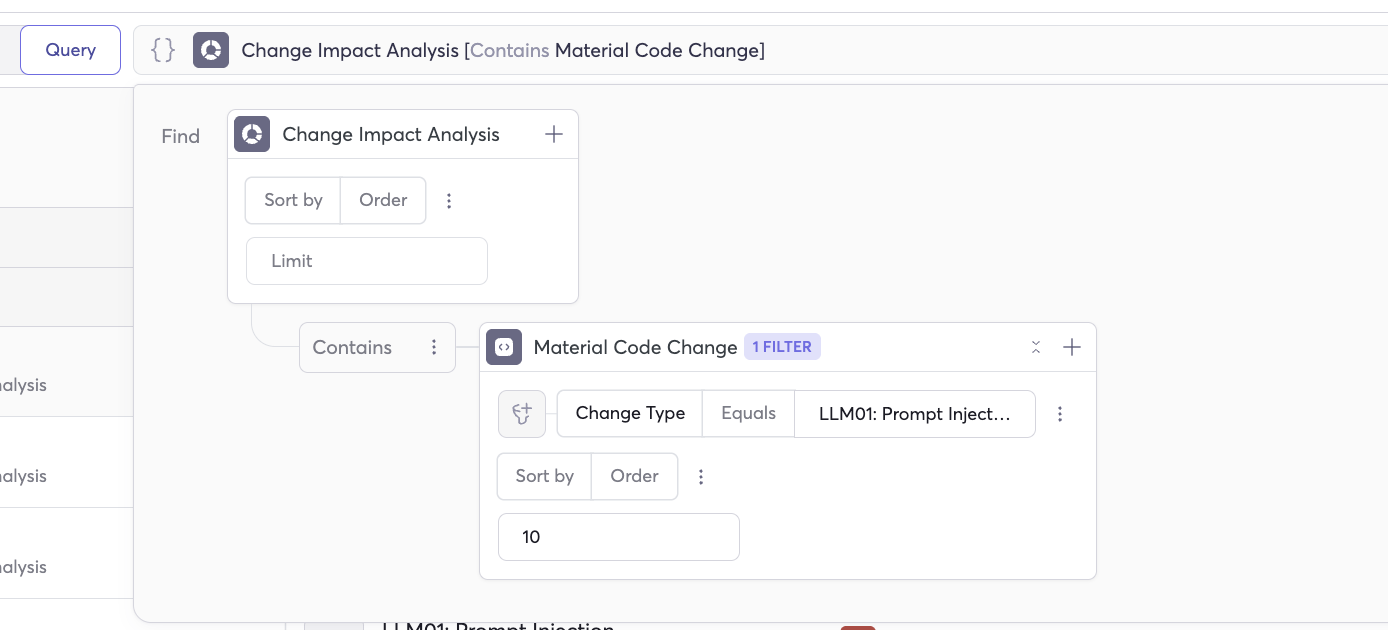

CIA is a fundamentally different engine. It’s not pattern-matching — it’s an AI-powered analysis engine that reads and understands every code change (pull requests, commits) and classifies its security impact based on configurable rules. Think of it as a security-aware code reviewer that never sleeps, never skims, and evaluates every change against your defined risk policies.

How CIA Works

Each CIA policy is fully configurable. You define a type of change (e.g., “LLM05: Improper Output Handling”), a policy goal (e.g., “Identify changes where LLM output is used in dangerous operations without validation”), concrete code examples the engine should match, and graduated risk definitions from CRITICAL to INFO, each with a clear description of what qualifies at that severity.

You control what the engine looks for and how it classifies what it finds.
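To illustrate the four parts of a policy described above, here is a hypothetical representation as a Python dataclass, using the example goal from the text. The field names, the `Risk` scale, and the severity wording are assumptions for illustration. They are not Cycode’s policy schema or configuration format.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Risk(IntEnum):
    INFO = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass
class ChangeImpactPolicy:
    """Hypothetical shape of a CIA policy: change type, goal, examples, risk levels."""

    change_type: str
    goal: str
    code_examples: list[str] = field(default_factory=list)
    risk_definitions: dict[Risk, str] = field(default_factory=dict)

    def missing_levels(self) -> list[Risk]:
        """Risk levels that still lack a written definition."""
        return [level for level in Risk if level not in self.risk_definitions]


policy = ChangeImpactPolicy(
    change_type="LLM output used in dangerous operations",
    goal="Identify changes where LLM output is used in dangerous operations without validation",
    code_examples=["subprocess.run(completion_text, shell=True)"],
    risk_definitions={
        Risk.CRITICAL: "Model output reaches a shell, eval, or SQL query with no validation",
        Risk.HIGH: "Model output reaches a dangerous sink behind partial validation",
        Risk.MEDIUM: "Model output is rendered as HTML without escaping",
    },
)
print([level.name for level in policy.missing_levels()])  # ['INFO', 'LOW']
```

A check like `missing_levels` is one way to keep graduated definitions complete before a policy goes live.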



CIA is a general-purpose engine. You can define policies for any security concern. Each policy includes a definition (what to look for), a scope (where it applies), and graduated risk levels from CRITICAL to INFO.

Applying CIA to AI Security: OWASP Top 10 for LLM Coverage

One of the most impactful use cases for CIA is creating custom policies that map to the OWASP Top 10 for LLM Applications (2025). These LLM-specific risks, including prompt injection, improper output handling, data and model poisoning, excessive agency, and unbounded consumption, are notoriously difficult to detect with traditional static analysis precisely because they’re context-dependent and semantic in nature.

CIA gives you the tools to build them yourself, tailored to your organization’s specific AI usage patterns. For example, you can create a policy that identifies code changes where untrusted input flows into LLM prompt construction, or a policy that flags changes where LLM output is passed to shell execution or database queries without validation, or a policy that detects when resource controls on AI API usage (rate limits, token budgets) are weakened or removed.

Each policy you create defines what “CRITICAL” vs. “HIGH” vs. “MEDIUM” looks like for your organization — because the risk of a prompt injection in an internal productivity tool is very different from one in a customer-facing financial advisor.

This is the key advantage of CIA for AI security: it closes the gap between what static rules can detect and what the OWASP LLM Top 10 describes. The risks are real and well-documented — and now you have an engine capable of detecting them at the code change level, before they ever reach production.

Getting Started

AI Security is available now in the Cycode platform. Here’s how to get started:

- See your current exposure. Navigate to Violations → AI Security to see all AI-related findings across your repositories. This gives you an immediate snapshot of your AI security posture.
- Review and triage your highest-risk findings. The AI Security view is sorted by risk score by default. Start with the CRITICAL and HIGH findings that need immediate attention.
- Enable Change Impact Analysis. Go to Settings → Change Impact Analysis to activate CIA policies for your repositories. Start by creating OWASP LLM policies.
- Build custom policies for your AI governance needs. Use the Knowledge Graph to define rules that match your specific AI adoption patterns: shadow AI inventory, compliance gates, team-level risk tracking.
- Integrate into your existing workflows. AI Security violations flow into the same triage, assignment, and remediation workflows you already use for every other Cycode finding. No new tools to learn, no new dashboards to check.

What’s Next

This launch is just the beginning of our AI security roadmap.

AI adoption isn’t slowing down — and neither should your ability to secure it. Schedule a demo to see AI Security in action, or log in to your Cycode dashboard and navigate to AI Security to explore your findings today.