Developers are adopting AI coding assistants, connecting MCP servers, pulling in AI models and packages, spinning up AI infrastructure, and embedding API keys for AI services, often without the security team ever knowing. The AI toolchain is expanding fast, and most organizations lack the visibility to track what’s being used, let alone whether it has been vetted or approved.

Without governance, the risks pile up fast: unauthorized tools expand your attack surface, sensitive data flows through unvetted services, AI API keys leak into repos, and compliance teams are left blind.

AI governance closes that gap. It is a continuous process for discovering what AI is in your environment, deciding what’s allowed, and enforcing those decisions where developers work. The goal is not to block AI but to make its adoption safe and auditable.

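At Cycode, we approach AI governance in three layers: see everything, govern and manage, and enforce where it matters. The four steps below walk through these layers, with custom policies (Step 3) extending the govern-and-manage layer to your own rules.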

Step 1: See Everything — AI Inventory as the Foundation

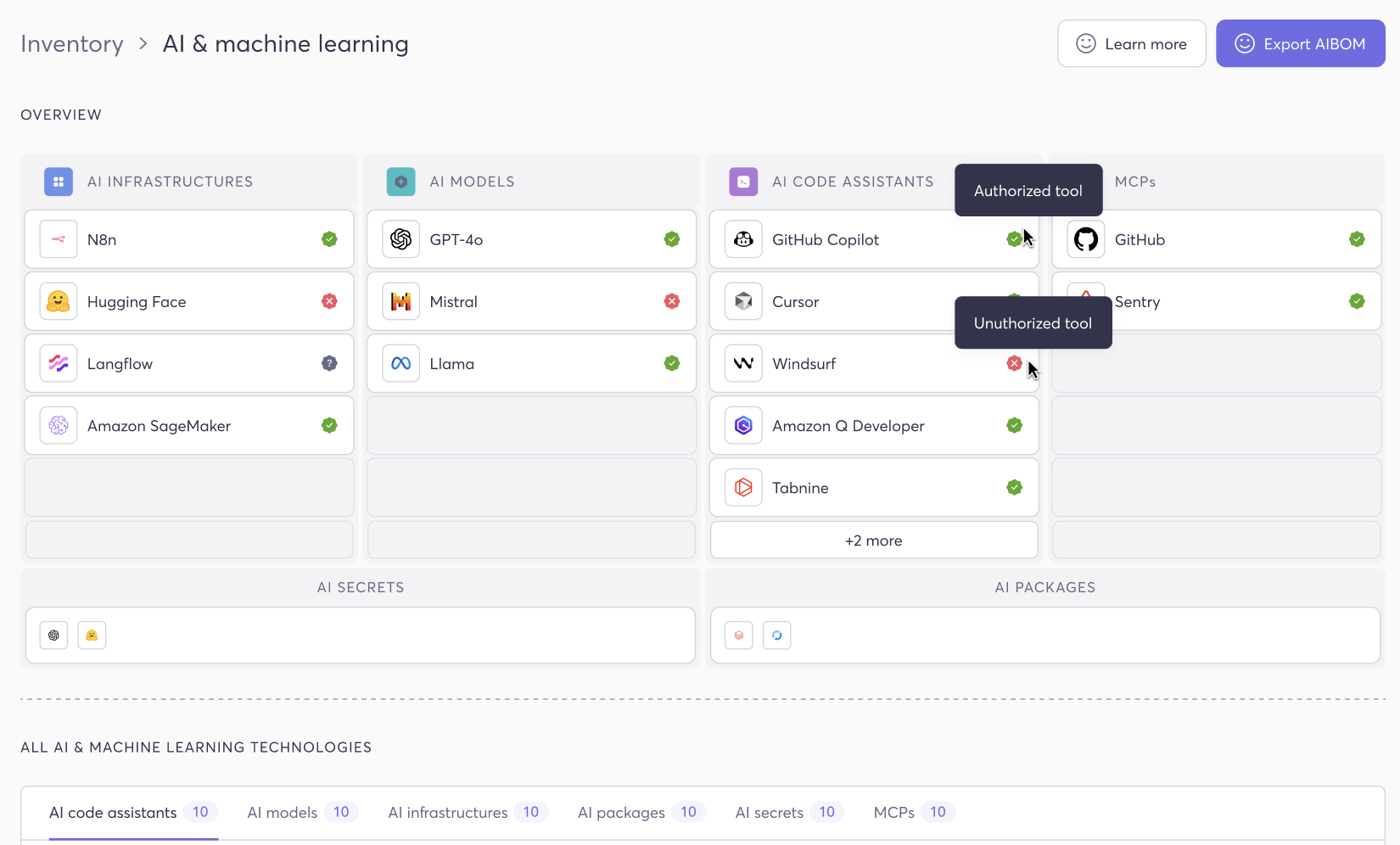

You can’t write a governance policy for tools you don’t know exist. That’s why the first layer of Cycode’s AI Governance is a comprehensive, continuously updated inventory of every AI and machine learning technology in your environment.

Cycode automatically discovers and catalogs AI tools across six categories:

- AI code assistants, such as GitHub Copilot, Cursor, and Tabnine
- AI models referenced in your codebase, such as GPT-4o, Mistral, and Llama
- AI infrastructure platforms, such as Hugging Face, Langflow, and Amazon SageMaker
- MCP servers connected to developer environments
- AI secrets, including API keys and tokens for services such as OpenAI, Anthropic, and Gemini
- AI packages and ML dependencies pulled into your applications
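To make one of these categories concrete, here is a minimal sketch of MCP server discovery. It assumes the JSON layout used by MCP clients such as Cursor (`.cursor/mcp.json`) and Claude Code (`.mcp.json`): a top-level `mcpServers` object whose entries have either a `command` (a locally launched server) or a `url` (a remote one). The file list, the `McpServer` type, and the function names are hypothetical, and this is not how Cycode performs discovery.

```python
import json
from dataclasses import dataclass
from pathlib import Path

# Hypothetical config locations, relative to a repository root.
# Real clients may also read user-level files (e.g. under the home directory).
CANDIDATE_FILES = [".mcp.json", ".cursor/mcp.json"]


@dataclass(frozen=True)
class McpServer:
    name: str
    source: str     # config file the entry was found in
    transport: str  # "stdio" if launched via a command, "remote" if reached via a URL
    target: str     # the command line or the URL


def parse_mcp_config(text: str, source: str) -> list[McpServer]:
    """Extract MCP server entries from a JSON config that uses the `mcpServers` key."""
    data = json.loads(text)
    servers = []
    for name, entry in data.get("mcpServers", {}).items():
        if "url" in entry:
            servers.append(McpServer(name, source, "remote", entry["url"]))
        elif "command" in entry:
            # Join the executable and its arguments into one readable command line.
            cmd = " ".join([entry["command"], *entry.get("args", [])])
            servers.append(McpServer(name, source, "stdio", cmd))
    return servers


def discover_mcp_servers(repo_root: Path) -> list[McpServer]:
    """Scan the candidate config files under a repository root."""
    found = []
    for rel in CANDIDATE_FILES:
        path = repo_root / rel
        if path.is_file():
            found.extend(parse_mcp_config(path.read_text(), str(path)))
    return found
```

Running `discover_mcp_servers(Path("."))` from a repository root lists every server declared in those two files; a real inventory would also need to cover user-level configs and the other five categories.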

This inventory isn’t a one-time snapshot. It’s a live view that shows AppSec teams which AI tools are in use across the organization, so they can stay in control and base governance decisions on current data.

Think of it as your AI Bill of Materials (AIBOM): a living, exportable map of every AI component your organization touches.
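An AIBOM can be as simple as a deduplicated list of components, each tagged with its category and the places it was seen. The sketch below assumes a hypothetical schema: the `Category` values, the `AibomEntry` fields, and the JSON shape are illustrative only. Standards such as CycloneDX define richer, interoperable formats for machine-learning BOMs, and this sketch does not follow them.

```python
import json
from dataclasses import asdict, dataclass, field
from enum import Enum


class Category(str, Enum):
    # The six inventory categories described above (value strings are hypothetical).
    CODE_ASSISTANT = "ai_code_assistant"
    MODEL = "ai_model"
    INFRASTRUCTURE = "ai_infrastructure"
    MCP_SERVER = "mcp_server"
    SECRET = "ai_secret"
    PACKAGE = "ai_package"


@dataclass
class AibomEntry:
    name: str
    category: Category
    locations: list[str] = field(default_factory=list)  # where it was seen (repo paths, IDEs, ...)


class Aibom:
    """A minimal, in-memory AI bill of materials keyed by (category, name)."""

    def __init__(self) -> None:
        self._entries: dict[tuple[Category, str], AibomEntry] = {}

    def record(self, name: str, category: Category, location: str) -> None:
        # Re-detections add a location to the existing entry instead of duplicating it.
        entry = self._entries.setdefault((category, name), AibomEntry(name, category))
        if location not in entry.locations:
            entry.locations.append(location)

    def export_json(self) -> str:
        # Sort for a stable, diff-friendly export.
        rows = sorted(self._entries.values(), key=lambda e: (e.category.value, e.name))
        return json.dumps([{**asdict(e), "category": e.category.value} for e in rows], indent=2)
```

Keying entries by category and name means repeated detections update locations rather than create new rows, which keeps a continuously updated inventory from growing noisy.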

Without this foundation, everything else — policies, enforcement, compliance — is guesswork.

Step 2: Govern and Manage — Authorization Workflows That Scale

Visibility is the prerequisite. Governance is where it gets real.

Once Cycode surfaces a new AI tool in your environment, the next question is simple but critical: Is this tool authorized?

The Three-State Authorization Model

Every AI tool Cycode discovers is assigned one of three authorization states:

Needs Review — This is the default state when a new tool is first detected. It signals to the security team that a new AI technology has entered the environment and requires evaluation. No assumptions are made; the tool is flagged for attention.

Authorized — After review, the security team can mark a tool as approved for use. This means the tool has passed your organization’s evaluation criteria — whether that includes security review, legal clearance, compliance checks, or all of the above.

Unauthorized — If a tool fails review, or your organization has decided it’s not permitted, it’s marked as unauthorized. This is where governance becomes enforcement.
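The three states behave like a small state machine in which every newly detected tool starts in Needs Review. The sketch below models that default, plus an audit trail recording who changed each decision; the class and method names are hypothetical and do not reflect Cycode’s API.

```python
from enum import Enum


class AuthState(Enum):
    NEEDS_REVIEW = "needs_review"  # default for any newly detected tool
    AUTHORIZED = "authorized"
    UNAUTHORIZED = "unauthorized"


class AuthorizationRegistry:
    """Tracks the review decision for each discovered AI tool (hypothetical model)."""

    def __init__(self) -> None:
        self._states: dict[str, AuthState] = {}
        # (tool, old_state, new_state, reviewer) tuples, kept for compliance reporting.
        self.audit_log: list[tuple[str, AuthState, AuthState, str]] = []

    def observe(self, tool: str) -> AuthState:
        # First detection puts the tool in NEEDS_REVIEW; later detections keep its current state.
        return self._states.setdefault(tool, AuthState.NEEDS_REVIEW)

    def decide(self, tool: str, new_state: AuthState, reviewer: str) -> None:
        # Only tools that have actually been detected can be reviewed.
        if tool not in self._states:
            raise KeyError(f"unknown tool: {tool}")
        old_state = self._states[tool]
        self._states[tool] = new_state
        self.audit_log.append((tool, old_state, new_state, reviewer))
```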

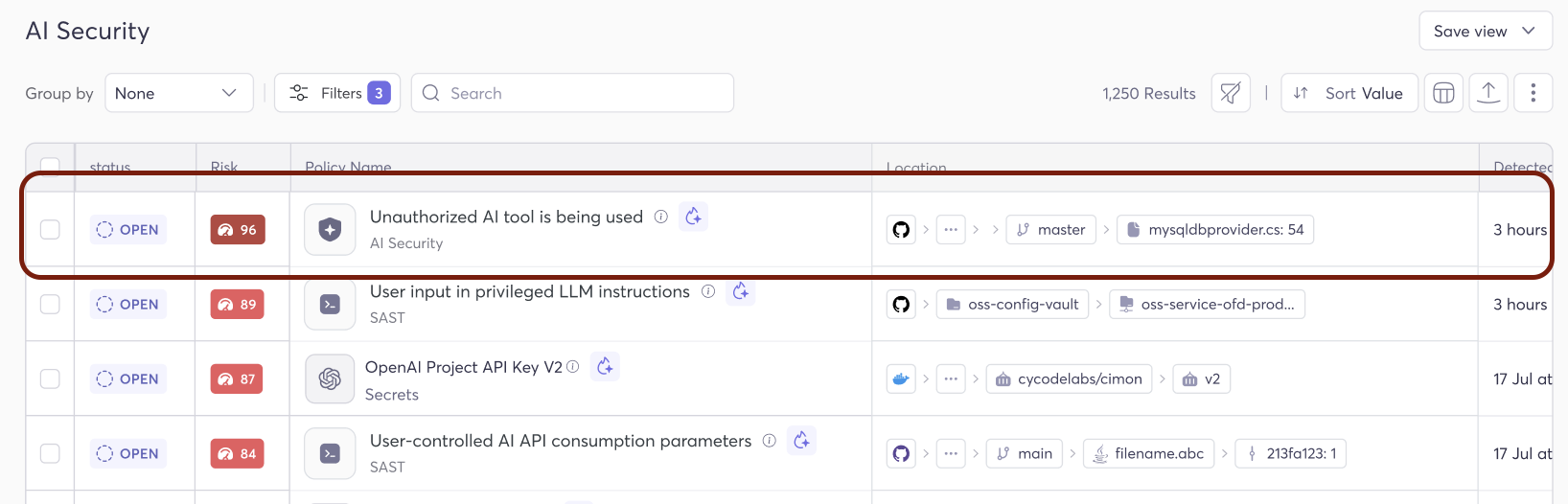

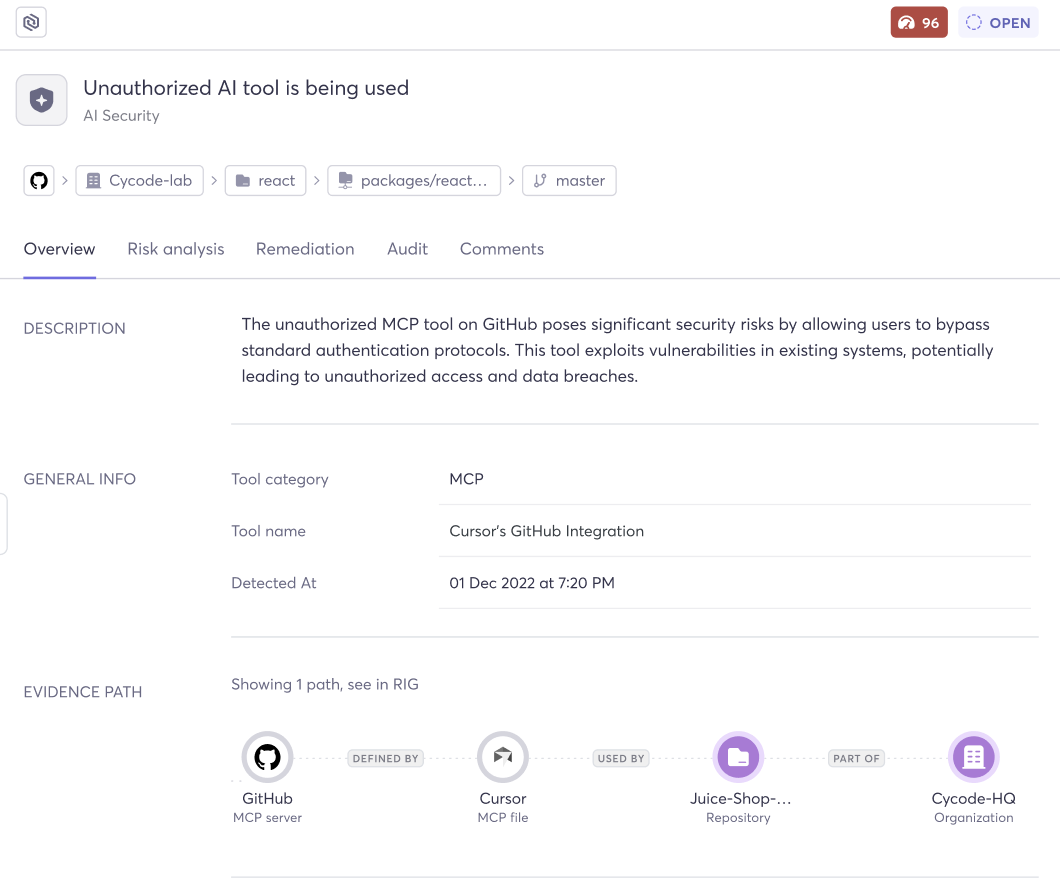

What Happens When a Tool Is Unauthorized

Marking a tool as “Unauthorized” isn’t just a label — it’s an active governance mechanism. From that point forward, every time Cycode detects usage or configuration of that unauthorized tool anywhere in your environment, it automatically generates a violation: “Unauthorized AI tool is being used.”

Each violation comes with full context:

- Critical risk score: unauthorized tool usage is flagged as critical severity, signaling that it requires immediate attention
- The tool: exactly which unauthorized AI technology was detected
- The evidence path: a clear chain showing where and how the tool was detected in your environment
- Metadata: detection timestamps, tool categories, and additional labels for custom workflows

This transforms AI governance from a periodic audit exercise into a continuous, automated enforcement loop. Your security team doesn’t need to chase developers or run manual checks. The platform does the work and surfaces violations with the context needed for fast triage and resolution.
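Once authorization states exist, the violation loop is simple in principle: each detection of a tool marked Unauthorized yields a critical-severity violation carrying the tool, the evidence path, and metadata. The sketch below assumes a hypothetical `Detection` shape and represents the evidence path as a tuple of locations; it illustrates the idea and is not Cycode’s detection engine.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Detection:
    tool: str
    category: str
    evidence_path: tuple[str, ...]  # e.g. ("org/repo", ".cursor/mcp.json")


@dataclass
class Violation:
    title: str
    severity: str
    tool: str
    evidence_path: tuple[str, ...]
    metadata: dict = field(default_factory=dict)


def evaluate(detections: list[Detection], unauthorized: set[str],
             labels: dict[str, list[str]] | None = None) -> list[Violation]:
    """Turn detections of unauthorized tools into critical-severity violations."""
    labels = labels or {}
    now = datetime.now(timezone.utc).isoformat()
    violations = []
    for d in detections:
        if d.tool not in unauthorized:
            continue  # authorized or still under review: no violation in this sketch
        violations.append(Violation(
            title="Unauthorized AI tool is being used",
            severity="critical",
            tool=d.tool,
            evidence_path=d.evidence_path,
            metadata={"detected_at": now, "category": d.category,
                      "labels": labels.get(d.tool, [])},
        ))
    return violations
```

Tools still in Needs Review produce no violation here, matching the description above, which ties violations to the Unauthorized state only.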

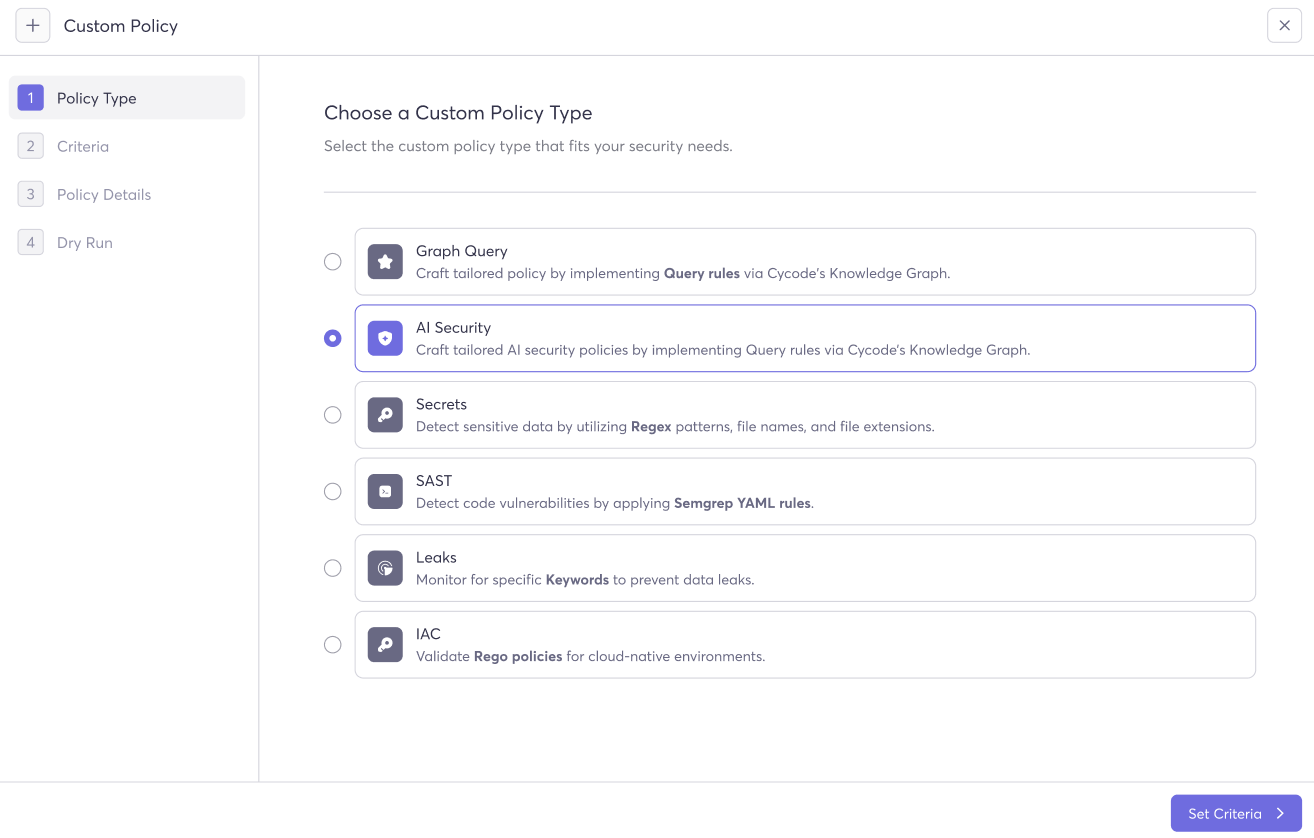

Step 3: Custom Policies — Your AI Governance, Your Rules

Predefined policies cover the common cases, but every organization’s AI adoption looks different. A fintech company embedding LLMs in financial advisory workflows has very different risk tolerances than a media company using them for content summarization. Real-world AI governance requires the ability to define custom rules based on your specific context.

The AI Security module supports Custom Policies built using Cycode’s Knowledge Graph — a queryable graph of your entire technology inventory, code dependencies, and associated violations.

How It Works

The Knowledge Graph lets you traverse relationships between entities to surface AI-specific risks that predefined policies can’t capture. For example:

- Shadow AI inventory: surface unauthorized AI adoption before it becomes a compliance issue
- Unapproved models/MCPs: detect usage of AI models or MCP servers that aren’t on your organization’s approved list
- AI in customer-facing apps: identify repositories with AI dependencies that are deployed to production customer-facing services
- Team-level AI risk: see which teams carry the most AI exposure, enabling risk-based conversations with engineering leadership
- AI dependency hygiene: focus remediation efforts on the AI components that matter most

Custom policy violations appear in the AI Security view alongside all other findings, fully integrated with triage, assignment, and remediation workflows. No separate dashboards. No context-switching.
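To illustrate the kind of traversal behind the “AI in customer-facing apps” example, the sketch below uses a tiny in-memory graph. The Knowledge Graph’s actual query interface is not described in this post, so the node attributes (`kind`, `ai`, `customer_facing`, `env`) and relation names (`DEPENDS_ON`, `DEPLOYED_TO`) are hypothetical.

```python
from collections import defaultdict


class Graph:
    """A tiny labeled property graph: nodes carry attributes, edges carry a relation name."""

    def __init__(self) -> None:
        self.nodes: dict[str, dict] = {}
        self.edges: dict[tuple[str, str], list[str]] = defaultdict(list)  # (src, relation) -> targets

    def add_node(self, node_id: str, **attrs) -> None:
        self.nodes[node_id] = attrs

    def add_edge(self, src: str, relation: str, dst: str) -> None:
        self.edges[(src, relation)].append(dst)

    def out(self, src: str, relation: str) -> list[str]:
        return self.edges.get((src, relation), [])


def ai_in_customer_facing_apps(g: Graph) -> list[tuple[str, list[str], list[str]]]:
    """Repos that depend on an AI package AND deploy to a customer-facing production service."""
    results = []
    for node_id, attrs in g.nodes.items():
        if attrs.get("kind") != "repository":
            continue
        # Hop 1: repository -> packages, keeping only those flagged as AI.
        ai_deps = [p for p in g.out(node_id, "DEPENDS_ON") if g.nodes.get(p, {}).get("ai")]
        # Hop 2: repository -> services, keeping customer-facing production ones.
        exposed = [s for s in g.out(node_id, "DEPLOYED_TO")
                   if g.nodes.get(s, {}).get("customer_facing")
                   and g.nodes[s].get("env") == "production"]
        if ai_deps and exposed:
            results.append((node_id, ai_deps, exposed))
    return results
```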

Practical Use Cases

- Shadow AI inventory: “Which repositories use AI/ML packages that haven’t been approved by security?”
- AI dependency hygiene: “Which AI packages have known vulnerabilities that haven’t been remediated?”
- Team-level AI risk: “Which teams have the most AI security exposure?”
- Compliance rules: “Flag any repository using an AI model-serving framework without an approved security review”
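Several of these questions reduce to set and aggregation operations once the underlying relationships are available. The sketch below answers three of them over plain dictionaries; the input shapes and function names are hypothetical stand-ins for data a knowledge graph would supply.

```python
from collections import Counter


def shadow_ai_repos(repo_ai_packages: dict[str, set[str]],
                    approved: set[str]) -> dict[str, set[str]]:
    """Shadow AI inventory: repos using AI/ML packages that security has not approved."""
    return {repo: pkgs - approved
            for repo, pkgs in repo_ai_packages.items() if pkgs - approved}


def unremediated_ai_vulns(repo_ai_packages: dict[str, set[str]],
                          vulnerable: dict[str, list[str]]) -> dict[str, dict[str, list[str]]]:
    """AI dependency hygiene: per repo, vulnerable AI packages and their advisory IDs."""
    out = {}
    for repo, pkgs in repo_ai_packages.items():
        hits = {p: vulnerable[p] for p in sorted(pkgs) if p in vulnerable}
        if hits:
            out[repo] = hits
    return out


def team_ai_exposure(repo_owner: dict[str, str],
                     open_findings: dict[str, int]) -> list[tuple[str, int]]:
    """Team-level AI risk: total open AI findings per owning team, highest first."""
    totals = Counter()
    for repo, count in open_findings.items():
        totals[repo_owner.get(repo, "unowned")] += count
    return totals.most_common()
```

For example, `shadow_ai_repos({"payments": {"openai", "langchain"}}, {"openai"})` returns `{"payments": {"langchain"}}`.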


Step 4: Enforce at the Developer Surface — MCP Guardrails

Visibility and management answer the question “what’s happening?” Enforcement answers “what do we do about it?” — ideally before the damage is done.

This is where Cycode is heading next with IDE hooks, starting with Cursor and Claude Code, with support for more tools to come.

Why MCPs Demand Special Attention

MCP servers represent a uniquely dangerous vector in the AI-powered development environment. Unlike a traditional IDE plugin that might suggest code completions, an MCP server can execute commands, call APIs, access databases, read files, and interact with external services — all triggered by natural language prompts within a developer’s workflow.

The risks are well documented and growing:

- Tool poisoning: attackers embed malicious instructions in MCP tool descriptions, which agents interpret as legitimate commands.
- Supply chain attacks: compromised MCP servers are distributed through community registries and turn malicious only after gaining widespread adoption.
- Privilege escalation: attackers exploit the broad permission scopes MCP servers typically request to move laterally across connected services.
- Data exfiltration: agents that communicate with multiple MCP servers can be used to bridge network boundaries and exfiltrate data.

These aren’t theoretical risks; they have appeared in documented incidents.

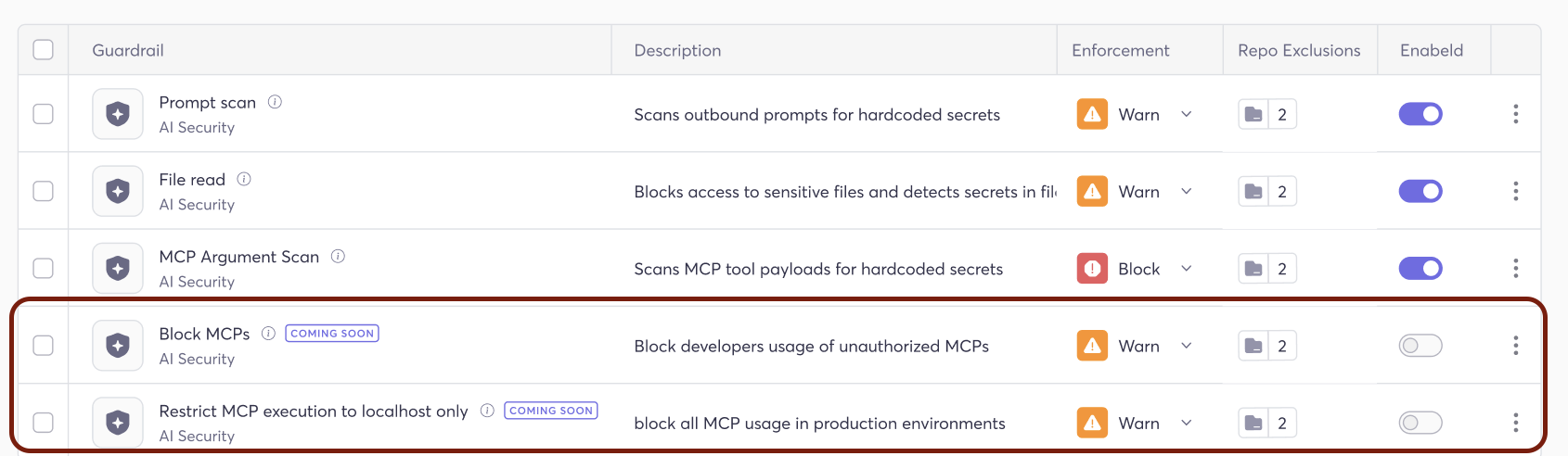

Two New Guardrails for MCP Governance

Cycode is introducing two new AI security guardrails designed to enforce MCP governance directly in the developer environment:

Block Unauthorized MCPs

When an MCP server is marked as unauthorized in Cycode’s inventory, this guardrail prevents developers from actually using it. Rather than relying on a violation after the fact, the hook intercepts the connection attempt at the IDE level, blocking execution before any data can be accessed or exfiltrated.

This closes the loop between governance decisions and developer reality. Your security team decides what’s allowed; the hook enforces it where it matters — in the tool the developer is actually using.

Restrict MCP Execution to Localhost Only

This guardrail gives security teams a middle ground between full access and a full block. For MCPs that are permitted but carry risk when connecting to remote environments, teams can restrict their execution to localhost only, allowing developers to use them locally while preventing any interaction with production or remote infrastructure.

A local MCP server operating within a developer’s sandbox is a fundamentally different risk profile than one executing commands against production systems. This guardrail lets security teams make that distinction on a per-MCP basis, choosing which servers to block entirely and which to allow under localhost-only constraints.
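A minimal sketch of how a pre-tool-use hook might apply both guardrails. It assumes a convention modeled on Claude Code’s `PreToolUse` hooks: the IDE passes a JSON payload with a `tool_name` field on stdin, MCP tools are named `mcp__<server>__<tool>`, and exit code 2 blocks the call. Cursor’s hooks use a different payload, so check each client’s current hook documentation. The policy sets, the endpoint map, and the server names are hypothetical, and this is not Cycode’s hook implementation.

```python
from __future__ import annotations

import ipaddress
import json
import sys
from urllib.parse import urlparse

# Hypothetical policy, e.g. synced from an inventory of authorization decisions.
UNAUTHORIZED_MCPS = {"untrusted-scraper"}
LOCALHOST_ONLY_MCPS = {"postgres"}
# Hypothetical map from MCP server name to the endpoint it is configured to reach.
MCP_ENDPOINTS = {"postgres": "postgresql://db.prod.internal:5432/app"}


def is_local(url: str) -> bool:
    """True if the URL's host is 'localhost' or a loopback IP address."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # any other hostname is treated as remote


def decide(server: str, endpoint: str | None) -> tuple[bool, str]:
    """Return (allowed, reason) for a call to the given MCP server."""
    if server in UNAUTHORIZED_MCPS:
        return False, f"MCP server '{server}' is marked unauthorized"
    # Fail closed: a localhost-only server with an unknown endpoint is blocked.
    if server in LOCALHOST_ONLY_MCPS and (endpoint is None or not is_local(endpoint)):
        return False, f"MCP server '{server}' is restricted to localhost"
    return True, "allowed"


def server_from_tool_name(tool_name: str) -> str | None:
    """Extract <server> from a tool name of the form 'mcp__<server>__<tool>'."""
    parts = tool_name.split("__", 2)
    if len(parts) == 3 and parts[0] == "mcp":
        return parts[1]
    return None


def main() -> int:
    event = json.load(sys.stdin)  # hook payload, assumed to carry "tool_name"
    server = server_from_tool_name(event.get("tool_name", ""))
    if server is None:
        return 0  # not an MCP call; let it through
    allowed, reason = decide(server, MCP_ENDPOINTS.get(server))
    if not allowed:
        print(reason, file=sys.stderr)  # reason surfaced back to the agent
        return 2  # block, under the assumed hook convention
    return 0


if __name__ == "__main__":
    sys.exit(main())
```

Note that this only checks the endpoint a server is configured to reach; it does not constrain what a locally running process does on the network, so a production guardrail would need stronger signals than a name and a URL.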

Together, these two guardrails give security teams a flexible enforcement toolkit: block unauthorized tools outright, or allow specific MCPs with restricted execution scope — all enforced directly in the developer’s IDE.

The Bigger Picture: AI Governance as a Platform Capability

These capabilities (inventory, authorization workflows, violation detection, custom policies, and IDE-level enforcement) don’t exist in isolation. They’re powered by the Cycode platform’s context graph, which maps business context, ownership, exposure paths, and root cause across your entire software factory.

That means when a violation fires for an unauthorized AI tool, it’s not just an alert — it’s enriched with who owns the repository, which team introduced the tool, how it connects to other systems, and what the potential blast radius is. This is what turns governance from a checkbox exercise into an operational capability that scales.
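One way to approximate “blast radius” is to treat it as everything reachable from the affected repository in a dependency or connectivity graph, found with a breadth-first search. The sketch below assumes a hypothetical adjacency list, a hypothetical `repository` field on the violation, and a three-hop cutoff; it is not how Cycode’s context graph computes exposure.

```python
from collections import deque


def blast_radius(edges: dict[str, list[str]], start: str, max_hops: int = 3) -> dict[str, int]:
    """Breadth-first search from `start`; returns each reachable node with its hop distance."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if dist[node] == max_hops:
            continue  # don't expand beyond the hop limit
        for nxt in edges.get(node, []):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    del dist[start]  # the starting node is not part of its own blast radius
    return dist


def enrich(violation: dict, owners: dict[str, str], edges: dict[str, list[str]]) -> dict:
    """Attach ownership and blast radius to a violation that names its repository."""
    repo = violation["repository"]
    return {**violation,
            "owner": owners.get(repo, "unknown"),
            "blast_radius": blast_radius(edges, repo)}
```

Recording the hop distance, rather than only the set of reachable nodes, lets triage rank directly connected systems above distant ones.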

AI governance isn’t about saying “no” to AI. It’s about saying “yes” with confidence — knowing exactly what’s in your environment, who approved it, and what happens when something falls outside the lines.

Ready to take control of AI across your development environment? Get a demo and see how Cycode’s AI Governance gives you full visibility, management, and enforcement — from code assistants to MCPs, models to secrets.