If you asked me to list the three most frustrating things I experience as a software developer, I would probably say:

- Performing manual repetitive tasks

- Long (and usually unstable) test runs

- The backlog

While the first two items are quite straightforward, you might ask yourself why I am so frustrated with backlogs. In theory, the backlog is a list of known issues to tackle later. In practice, I find that it usually becomes an “unpopular task abyss” into which we throw all of the “non-critical issues” we find.

But due to the never-ending roadmap and feature requests, we don’t really know when (or if) we’ll ever get to cleaning up that mess. Instead, we find ourselves opening a “Refactor X” JIRA ticket and throwing it into some “Technical Debt” epic. (There is a heavily discussed “Zero bug policy” topic that I am fond of, but this can be a subject for a future blog post.)

Are we destined to keep coding features or debugging issues with poorly written classes or needlessly complicated flows?

The Solution: A Quality Sprint

As developers, we need to understand and live with the Quality vs. Quantity tradeoff. We can’t just sprint forward without stopping to check that our platform still works properly, but we also can’t keep refactoring our current code all of the time without delivering value to our customers.

A good solution in my opinion is a Quality Sprint / Quality Week. These are dedicated timeframes during which everyone on the engineering team stops their day-to-day work and focuses on fixing the development pains that hold us back and cause us frustration.

A quality sprint starts with the development team going over the “backlog” and choosing the issues that trouble them most. It could be things like adding test coverage to flows that break often, refactoring specific classes that have become too hard to read or enhancing monitoring capabilities to be better prepared for future debugging.

Once the tasks are chosen, they are distributed throughout the team. It’s best to give people the tasks that they created about issues that bother them most. I also recommend making sure that the tasks are feasible and can be finished in the proper time frame, so don’t choose a huge refactor that you can’t finish or properly test.

After the sprint is done, the team goes back to their daily work, but the backlog is cleaner and the code is tidier and performing better.

This is exactly what we did at Cycode. In the following sections, I’d like to tell you what we did during our Quality Sprint and our achievements.

Quality Sprint Targets

After some internal brainstorming and discussions, we decided our Quality Sprint would focus on:

- Performance

- Internal Monitoring (for Developer on-call)

- Tests

These were the most pressing topics we felt needed to be tackled, and the ones most likely to yield quick, highly effective wins. Here’s what we achieved with each one.

Quality Sprint Achievements

Performance

A quality sprint is the perfect occasion to drill into your monitoring systems and understand your platform’s current pain points. In our case, the purpose was mainly to go over our longest Postgres queries, API routes with high latency and other “low-hanging fruit”. These were issues we knew we could handle once we had the time and focus. The most important part of this effort was the overdue runtime upgrade from .NET Core 3.1 to .NET 5.

While we as developers always want to be using the most cutting-edge technologies and versions, we didn’t choose this task just to be able to say we use the latest version (although this is important as well). Rather, the main benefit for us was the regex performance improvements in .NET 5, since we use regular expressions quite heavily in some of our services.

The upgrade itself wasn’t too complicated, and other than several “nullability” and “serialization” issues that occurred in the new runtime, the change went quite smoothly. We have yet to run proper tests to measure the actual performance boost we received from the upgrade, but we plan to do so soon.

The main tip I can share regarding version upgrades is to run tests, and a lot of them.

This will make you a lot more confident that you didn’t break anything. Another tip is to save the version configuration in a single location. We have a single base Docker image and a single base .csproj template, which all of the other images/projects import. This makes the actual version upgrade a change in a single location (which is also very helpful for reverts if needed). Sounds trivial, but easier said than done. Here is an example of our base .csproj file and how it is used by our other services.

Base .csproj file (CommonProject):

    <Project>
      <PropertyGroup>
        <TargetFramework>netcoreapp3.1</TargetFramework>
        <Nullable>enable</Nullable>
        <TreatWarningsAsErrors>true</TreatWarningsAsErrors>
      </PropertyGroup>
      ...

...

Some other project file:

<Import Project="..\Cycode.Common\Cycode.Common.Data\CommonProjectFile\CommonProject" />
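To make the "single location" point concrete, here is a rough sketch of what the upgrade could look like with this layout. `net5.0` is the standard target framework moniker for .NET 5. Everything else in the base file that was elided above is left out here, and the contents of the service project other than the Import line are an assumption for illustration.

```xml
<!-- CommonProject (shared base file): the only line that changes for the upgrade -->
<Project>
  <PropertyGroup>
    <!-- previously: netcoreapp3.1 -->
    <TargetFramework>net5.0</TargetFramework>
    <Nullable>enable</Nullable>
    <TreatWarningsAsErrors>true</TreatWarningsAsErrors>
  </PropertyGroup>
</Project>
```

```xml
<!-- A hypothetical service project: it declares no TargetFramework of its own -->
<Project Sdk="Microsoft.NET.Sdk">
  <Import Project="..\Cycode.Common\Cycode.Common.Data\CommonProjectFile\CommonProject" />
</Project>
```

Because the service projects only import the shared file, reverting the upgrade is the same one-line change in reverse.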

Internal Monitoring

At Cycode, we believe in full developer ownership. This means that the developer is responsible for designing, implementing and monitoring their own features. We have a main Slack channel to which our critical errors are sent and more domain-specific channels for less critical but still important issues. We’ve also built multiple dashboards that show us problematic trends in our different flows, so we know when we need to act.

As part of the quality sprint, we added dashboards and alerts for non-trivial parameters, such as Kafka topic lags and 3rd party API rate limits. These are areas in which we were blind and could only detect problems after they had already occurred.

In addition, we automated certain tasks that we used to perform manually. For example, adding Mongo indexes to collections is something that we did only once, by hand, in our SaaS environment. Now that we are expanding to support on-premises environments, doing these things manually is no longer good enough.

This is classic startup mentality. Run fast and do only what you need to do. The moment you see that it starts hurting you, take the time and effort to automate the process.
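As an illustration of the Mongo index automation mentioned above, here is a minimal sketch using the official MongoDB .NET driver. The collection name, field names and index name are hypothetical, and this is not our actual initialization code. It relies on the fact that asking MongoDB to create an index that already exists with the same definition is a no-op, so the method can run on every startup.

```csharp
using MongoDB.Bson;
using MongoDB.Driver;

public static class IndexInitializer
{
    // Creates the indexes an environment needs. Safe to call repeatedly:
    // MongoDB does nothing when an identical index already exists.
    public static void EnsureIndexes(IMongoDatabase database)
    {
        // "scan_results", "organizationId" and "createdAt" are made-up names.
        var collection = database.GetCollection<BsonDocument>("scan_results");

        var keys = Builders<BsonDocument>.IndexKeys
            .Ascending("organizationId")
            .Descending("createdAt");

        var model = new CreateIndexModel<BsonDocument>(
            keys,
            new CreateIndexOptions { Name = "organizationId_createdAt" });

        collection.Indexes.CreateOne(model);
    }
}
```

Calling something like `IndexInitializer.EnsureIndexes(database)` during service startup or from a deployment job means a new on-premises environment gets the same indexes as SaaS without anyone remembering to add them.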

Tests

As most of the people who have worked with me know, I am really passionate about tests. Beyond the coding confidence and the enormous time savings, what really made me a test fanatic is the simple fact that you can fix a bug and then write a test that makes sure the issue never happens again.

Just several months after we signed our first paying customer, I insisted that we needed a proper integration test framework as part of our CI process. Although it seems trivial now, it wasn’t easy to convince a team of six developers to devote ⅙ of its development effort to writing a test infrastructure for our barely existing functionality.

As you can imagine, the first prototype of the integration test mechanism wasn’t efficient. Even though we started with a microservice approach from the beginning and could easily identify which service had changed in a specific commit, we still ran ALL of our tests in every CI execution. Moreover, we had issues running the tests in parallel (XUnit issues), and after several hours of investigation we decided that it was OK for now and “we’ll handle it when it is really needed” (sound familiar?).

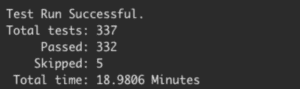

Fast-forward a year, and our integration test job contains ~330 tests and takes ~20 minutes to pass successfully. While this might sound acceptable for some developers, our development team is growing and having a test infrastructure that takes longer to run for each added test will soon become a real pain.

As a side note, I have seen CI flows that took an hour and a half to complete, and to top it off weren’t stable. You can’t really explain in words the feeling of waiting 90 minutes for your CI to pass only to see that a (maybe) non-related test failed and you need to re-run it.

Therefore, we decided to tackle this issue from two angles at the same time.

First, we dove into the XUnit issues to make the tests run in parallel (as much as possible). Second, we adjusted the infrastructure to only run the tests that are related to the service that was changed.
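The second angle, running only the tests of the service that changed, can be sketched roughly as follows. This is not our actual CI code: it assumes a hypothetical repository layout of `services/<ServiceName>/...`, and it conservatively falls back to running everything when a changed file lives outside a known service folder (shared code, build files and so on).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class AffectedServices
{
    // Maps the files changed in a commit to the services whose tests should run.
    // Assumed layout (hypothetical): services/<ServiceName>/...
    public static IReadOnlyCollection<string> FromChangedPaths(
        IEnumerable<string> changedPaths,
        IReadOnlyCollection<string> allServices)
    {
        var affected = new SortedSet<string>(StringComparer.Ordinal);

        foreach (var path in changedPaths)
        {
            var parts = path.Replace('\\', '/')
                .Split('/', StringSplitOptions.RemoveEmptyEntries);

            if (parts.Length >= 2 && parts[0] == "services" && allServices.Contains(parts[1]))
            {
                affected.Add(parts[1]);
            }
            else
            {
                // Anything outside a known service folder may affect everyone,
                // so run the full suite.
                return allServices;
            }
        }

        return affected;
    }
}
```

In a CI job, the changed paths could come from the output of `git diff --name-only` against the target branch, and the resulting service names could then decide which test projects to run or feed a `dotnet test --filter` expression. An empty list of changes yields an empty result, meaning no integration tests need to run.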

To be honest, we weren’t sure that we could solve both of these issues and hoped that we could at least have a single win that would reduce test durations. But it seems that working on issues that really bother you can take your skills to the next level, and our great developers were able to seal both wins.

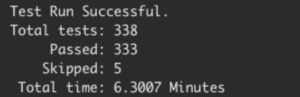

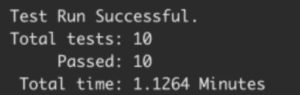

Bottom line, running the entire test suite now takes six minutes. In addition, changing a specific service will only run the tests that are related to that service, which can take less than a minute for specific services. And of course, there is nothing more satisfying than seeing the numbers.

Running all tests before the change:

Running all tests after the change:

Changing a specific service:

We still have multiple action items in order to reduce these six minutes even more, but you can’t be too greedy.

I could write a dedicated blog post on how we were able to achieve these results (and maybe I will in the future), but for now I will add two tips for anyone interested:

- XUnit parallelism doesn’t work well with the .Wait(), .Result and .GetAwaiter().GetResult() methods. If you need synchronous code (we needed a small part for Setup and Teardown flows), go full synchronous (including synchronous API calls instead of asynchronous ones).

- XUnit `maxParallelThreads` setting should be set to unlimited (-1). Without that setting our tests kept deadlocking.
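To make the two tips more tangible, here is a small sketch, assuming xUnit v2. `ITestEnvironment` and `InMemoryEnvironment` are hypothetical stand-ins for whatever your setup and teardown flows talk to. The first test class follows the tip as written (setup and teardown are synchronous end to end). The second shows an alternative that we did not describe above: keeping everything asynchronous through xUnit's `IAsyncLifetime`. What both avoid is blocking on a task with `.Wait()` or `.Result`.

```csharp
using System;
using System.Threading.Tasks;
using Xunit;

// Hypothetical environment with both a synchronous and an asynchronous API.
public interface ITestEnvironment
{
    void Reset();
    Task ResetAsync();
}

public sealed class InMemoryEnvironment : ITestEnvironment
{
    public int ResetCount { get; private set; }
    public void Reset() => ResetCount++;
    public Task ResetAsync() { ResetCount++; return Task.CompletedTask; }
}

// Option A: fully synchronous setup and teardown.
public class SyncSetupTests : IDisposable
{
    private readonly InMemoryEnvironment _env = new InMemoryEnvironment();

    // Calls the synchronous API; it does NOT call _env.ResetAsync().Wait().
    public SyncSetupTests() => _env.Reset();

    public void Dispose() => _env.Reset();

    [Fact]
    public void EnvironmentIsResetBeforeEachTest() => Assert.Equal(1, _env.ResetCount);
}

// Option B: asynchronous all the way, no blocking calls anywhere.
public class AsyncSetupTests : IAsyncLifetime
{
    private readonly InMemoryEnvironment _env = new InMemoryEnvironment();

    public Task InitializeAsync() => _env.ResetAsync();

    public Task DisposeAsync() => _env.ResetAsync();

    [Fact]
    public async Task EnvironmentIsResetBeforeEachTest()
    {
        await Task.Yield();
        Assert.Equal(1, _env.ResetCount);
    }
}
```

The thread setting from the second tip lives in an `xunit.runner.json` file in the test project, which has to be copied to the build output directory for the runner to pick it up:

```json
{
  "$schema": "https://xunit.net/schema/current/xunit.runner.schema.json",
  "parallelizeTestCollections": true,
  "maxParallelThreads": -1
}
```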

Other than that, we added integration tests for two flows that we did not cover up until now, and also refactored one of our legacy classes to be a lot more flexible and “up to date”. Overall, an excellent result for only five days of work!

Summing Up the Quality Sprint Experience

The Quality Sprint was a fantastic experience. Overall, we managed to:

- Reduce our integration test execution times dramatically.

- Add integration tests for two flows that we did not cover up until now.

- Refactor one of our legacy classes to be a lot more flexible and “up to date”.

- Enhance our monitoring systems to automatically alert on issues that we could only find manually before.

- Improve our “initialization” process for on-premise environments.

But apart from the important milestones listed above, the main thing I took from the sprint was the passion with which our team tackled the problems. Working on things that really annoy you in your day-to-day work, things that you have complained about and now have the chance to fix, can really raise your creativity and morale.

Moreover, I think that knowing that quality is important, and that there will be time to improve the development experience in the future, makes our development team less frustrated in its day-to-day tasks. Therefore, I highly recommend implementing Quality Sprints, both for your backlog and for your team’s morale.

Feel free to contact us here for questions regarding the problems we faced or if you would like more information regarding our company. We also have some open positions, which you can check out here.